Retrieval-Augmented Generation (RAG) has become a key tool for organizations that want to put unstructured knowledge to work. By combining retrieval with generative AI, RAG systems can answer complex queries, summarize large document collections, and deliver contextually relevant insights. Despite this promise, RAG pipelines often fall short because of limitations in the retrieval, augmentation, and generation phases.

In this post, we’ll explore practical steps for addressing common RAG challenges and building more robust pipelines. Three foundational techniques (diversifying retrieval, combining semantic and keyword search, and reranking) are designed to help you optimize your RAG system’s performance. They are fast to apply, cost-effective, and impactful, and they remain accessible even with smaller models.

For a deeper dive into the technical details, check out the accompanying workshop video and GitHub repository.

Challenges in RAG Pipelines

RAG pipelines are only as strong as their weakest phase. While their innovative architecture combines retrieval with generation, naive implementations often encounter significant challenges:

- Retrieval Issues: Irrelevant, redundant, or incomplete data fetched by simplistic methods.

- Augmentation Gaps: Poor contextual alignment that fails to bridge raw data and nuanced queries.

- Generation Shortcomings: Misleading, incomplete, or contextually off-target outputs caused by flawed inputs.

These limitations have direct business consequences: slower decision-making, reduced customer satisfaction, and missed compliance requirements. Addressing them starts with refining the retrieval and augmentation phases, the foundational layers of any RAG system.

Practical Steps to Overcome RAG Limitations

Step 1: Diversify Retrieval Results with MMR

Maximal Marginal Relevance (MMR) is a technique designed to balance relevance and diversity in retrieved documents. By penalizing candidates that are too similar to results already selected, MMR helps your pipeline capture a broader range of useful information.

- How it works: MMR ranks documents not just by relevance to the query but also by their novelty compared to already selected documents.

- Practical Impact: Reduces redundancy in retrieved documents, improving the overall quality of the retrieved context.
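The selection loop behind MMR can be sketched in a few lines of plain Python. This is a minimal illustration, not the workshop's implementation: the function names, the `lambda_mult` weighting parameter, and the toy two-dimensional vectors are assumptions made for the example. In a real pipeline the vectors would come from an embedding model.

```python
import math

def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0

def mmr(query_vec, doc_vecs, k=3, lambda_mult=0.5):
    """Return the indices of up to k documents picked by MMR.

    lambda_mult=1.0 ranks by relevance only; lower values penalize
    similarity to documents that were already selected.
    """
    candidates = list(range(len(doc_vecs)))
    selected = []

    def mmr_score(i):
        relevance = cosine(query_vec, doc_vecs[i])
        redundancy = max(
            (cosine(doc_vecs[i], doc_vecs[j]) for j in selected), default=0.0
        )
        return lambda_mult * relevance - (1 - lambda_mult) * redundancy

    while candidates and len(selected) < k:
        best = max(candidates, key=mmr_score)
        selected.append(best)
        candidates.remove(best)
    return selected

# Toy data (made up): documents 0 and 1 are near-duplicates, document 2 differs.
query = [1.0, 0.0]
docs = [[1.0, 0.1], [1.0, 0.12], [0.8, -0.6]]

print(mmr(query, docs, k=2, lambda_mult=1.0))  # relevance only: [0, 1]
print(mmr(query, docs, k=2, lambda_mult=0.5))  # with diversity: [0, 2]
```

With relevance alone, both near-duplicates are returned. Once the diversity penalty is active, the second slot goes to the document that adds new information.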

Step 2: Combine Semantic and Keyword Search with Hybrid Retrieval

Hybrid retrieval blends two complementary methods: BM25 for keyword-based relevance and embeddings for semantic similarity. The combination helps your pipeline retrieve documents that match the query’s exact terms as well as documents that match its meaning.

- How it works: BM25 scores documents on exact term matches, weighting each match by term frequency, term rarity, and document length, while embeddings capture deeper relationships through vector similarity.

- Practical Impact: Increases retrieval accuracy by capturing domain-specific terminology and broader contextual relevance.
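One way to picture hybrid retrieval is a small BM25 scorer whose ranking is merged with a semantic ranking. The sketch below rests on several assumptions: the source does not say how the two result lists are merged, so reciprocal rank fusion is used here as one common option; the documents and query are invented; and the semantic ranking is hard-coded as a stand-in for the output of an embedding search.

```python
import math
from collections import Counter

def bm25_scores(query, docs, k1=1.5, b=0.75):
    """Score each document against the query with BM25 (whitespace tokens)."""
    tokenized = [d.lower().split() for d in docs]
    n_docs = len(tokenized)
    avg_len = sum(len(t) for t in tokenized) / n_docs
    doc_freq = Counter(term for t in tokenized for term in set(t))
    scores = []
    for tokens in tokenized:
        tf = Counter(tokens)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            idf = math.log((n_docs - doc_freq[term] + 0.5) / (doc_freq[term] + 0.5) + 1)
            norm = tf[term] + k1 * (1 - b + b * len(tokens) / avg_len)
            score += idf * tf[term] * (k1 + 1) / norm
        scores.append(score)
    return scores

def to_ranking(scores):
    """Document indices ordered from highest to lowest score."""
    return sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

def reciprocal_rank_fusion(rankings, k=60):
    """Merge several rankings; documents ranked high in any list rise."""
    fused = Counter()
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            fused[doc_id] += 1.0 / (k + rank)
    return sorted(fused, key=lambda d: fused[d], reverse=True)

# Invented example documents and query.
docs = [
    "Reset the device by holding the power button",
    "The unit fails to start when the water circuit is blocked",
    "Error code E42 indicates a pump fault",
]
query = "E42 pump not starting"

keyword_ranking = to_ranking(bm25_scores(query, docs))  # exact terms favor doc 2
semantic_ranking = [1, 2, 0]  # assumed output of an embedding search
fused = reciprocal_rank_fusion([keyword_ranking, semantic_ranking])
print(fused)  # [2, 1, 0]
```

The keyword side finds the exact error code, the semantic side finds the paraphrased symptom, and the fused list places both ahead of the unrelated document.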

Step 3: Prioritize Top Results with Reranking

Reranking applies advanced machine learning models, such as cross-encoders, to reorder the retrieved documents based on contextual relevance. This step focuses on pushing the most important documents to the top.

- How it works: A cross-encoder processes the query and each candidate document together as a single input and assigns the pair a relevance score; the candidates are then sorted by that score.

- Practical Impact: Moves the documents most useful for answering complex queries to the top, reducing noise and improving precision.
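The reranking step itself is a score-and-sort over (query, document) pairs. The sketch below keeps the scoring function pluggable so that it runs without downloading a model. The `overlap_score` function is only a placeholder and not a cross-encoder, and the names and sample texts are assumptions made for illustration.

```python
from typing import Callable, List

def rerank(
    query: str,
    docs: List[str],
    score_pair: Callable[[str, str], float],
    top_n: int = 3,
) -> List[str]:
    """Score every (query, document) pair and return the top_n documents."""
    scored = [(score_pair(query, doc), doc) for doc in docs]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_n]]

def overlap_score(query: str, doc: str) -> float:
    """Placeholder scorer: fraction of query tokens that appear in the document.

    In practice, score_pair could wrap a cross-encoder, for example the
    CrossEncoder.predict method of the sentence-transformers library
    (an assumption; the source does not name a library or model).
    """
    q_tokens = set(query.lower().split())
    d_tokens = set(doc.lower().split())
    return len(q_tokens & d_tokens) / len(q_tokens) if q_tokens else 0.0

# Invented candidates, as if returned by the retrieval step.
candidates = [
    "Reset the device by holding the power button",
    "Error code E42 indicates a pump fault",
    "Pump maintenance schedule",
]
top = rerank("pump fault code", candidates, overlap_score, top_n=2)
print(top)
```

Because the scorer sees the query and the document together, swapping the placeholder for a trained cross-encoder changes only the `score_pair` argument, not the surrounding pipeline.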

By integrating these three steps, RAG pipelines can address retrieval challenges head-on, laying the groundwork for better augmentation and generation.

Why These Steps Matter

These practical refinements are simple yet highly effective. Unlike advanced generation techniques that require significant computational resources, improving retrieval is cost-effective and scalable. For organizations leveraging smaller models, these steps make it possible to deliver precise and reliable outputs without overhauling existing infrastructure.

Case Study: Workshop Recap

During our recent workshop, we demonstrated how these foundational techniques improve RAG performance. Using a 7-billion-parameter model, we optimized a RAG pipeline to handle real-world transformer specifications. By enhancing retrieval diversity, combining hybrid methods, and reranking results, the pipeline delivered precise and contextually accurate answers—despite the constraints of the smaller model.

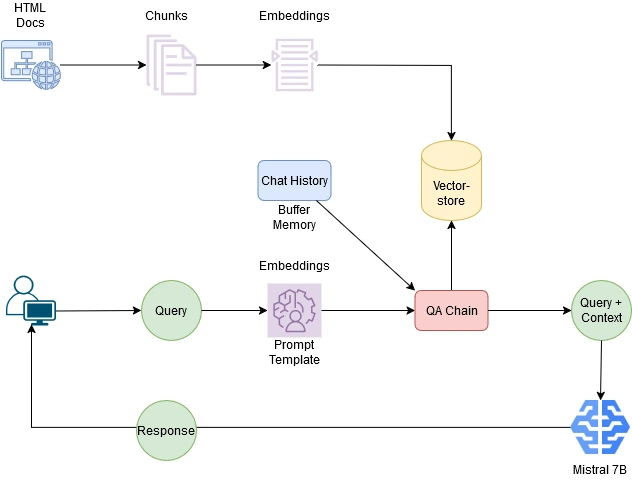

The following diagram provides an overview of the pipeline architecture we used during the workshop:

Explanation of the Diagram:

- Document Processing: Raw documents (e.g., PDFs or HTML files) are chunked and embedded for storage in a vector database.

- Retrieval Process: When a user submits a query, relevant chunks are retrieved using techniques such as MMR, hybrid retrieval, and reranking.

- Generation Phase: The retrieved context is passed to the QA chain along with the query for response generation using the language model.

- Additional Components:

  - Buffer memory stores chat history for conversational context.

  - The QA chain uses a customizable prompt template to format the input for the model.
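The supporting pieces around retrieval (chunking, buffer memory, and the prompt template) can be sketched in plain Python. This is an illustrative outline under stated assumptions, not the workshop code: the chunk sizes, the `BufferMemory` class, the template wording, and all function names are hypothetical, and the call to the language model is left out.

```python
from collections import deque

def chunk_text(text, chunk_size=200, overlap=50):
    """Split text into character chunks that overlap by `overlap` characters."""
    if overlap >= chunk_size:
        raise ValueError("overlap must be smaller than chunk_size")
    if not text:
        return []
    step = chunk_size - overlap
    last_start = max(len(text) - overlap, 1)
    return [text[i:i + chunk_size] for i in range(0, last_start, step)]

class BufferMemory:
    """Keeps the most recent question/answer turns for conversational context."""

    def __init__(self, max_turns=5):
        self.turns = deque(maxlen=max_turns)

    def add(self, question, answer):
        self.turns.append((question, answer))

    def render(self):
        return "\n".join(f"User: {q}\nAssistant: {a}" for q, a in self.turns)

# Hypothetical template; a real QA chain would let you customize this text.
PROMPT_TEMPLATE = (
    "Answer the question using only the context below.\n\n"
    "Context:\n{context}\n\n"
    "Chat history:\n{history}\n\n"
    "Question: {question}\nAnswer:"
)

def build_prompt(question, retrieved_chunks, memory):
    """Format retrieved context, chat history, and the question for the model."""
    return PROMPT_TEMPLATE.format(
        context="\n---\n".join(retrieved_chunks),
        history=memory.render(),
        question=question,
    )

chunks = chunk_text("abcdefghij", chunk_size=4, overlap=1)
memory = BufferMemory(max_turns=1)
memory.add("first question", "first answer")
memory.add("second question", "second answer")  # evicts the first turn
prompt = build_prompt("third question", chunks[:2], memory)
print(prompt)
```

The overlap between chunks reduces the chance that a sentence cut at a chunk boundary is lost to retrieval, and the bounded memory keeps the prompt from growing with every turn.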

One of the key insights from our workshop was that RAG pipelines are only as strong as their weakest phase. Optimizing retrieval and augmentation builds a solid foundation for accurate and reliable results, even when using smaller models.

Key Takeaways from the Workshop:

- Challenges in RAG:

  - Retrieving irrelevant, redundant, or incomplete data.

  - Poor contextualization of retrieved information.

  - Generating incomplete or misleading outputs.

- Pipeline Optimization:

  - Improvements at the embedding and retrieval stages are simpler, cost-effective, and highly impactful.

  - Techniques we explored include:

    - MMR: Diversifies retrieval results.

    - Hybrid Retrieval: Combines keyword relevance with semantic similarity for better accuracy.

    - Reranking: Elevates the most relevant content for higher precision.

- Lessons Learned:

  - Quality retrieval is the foundation of RAG success.

  - Incremental optimizations lead to significant gains.

  - Combining strategies improves both relevance and output accuracy.

  - Optimized pipelines enable smaller models to deliver precise, contextually relevant answers efficiently.

For a hands-on demonstration, watch the workshop video or explore the GitHub repository containing all the code and examples.

Outlook for Future Techniques

While the steps covered here provide a solid foundation, RAG optimization is a continuous journey. Advanced techniques such as query rewriting, reflective RAG, and agentic RAG systems promise even greater improvements in handling complex queries and dynamic contexts. Stay tuned for future posts where we’ll dive into these cutting-edge approaches.

Conclusion

Optimizing RAG pipelines doesn’t have to be overwhelming. By starting with simple steps like MMR, hybrid retrieval, and reranking, organizations can significantly enhance their systems’ performance. These improvements are cost-effective and can also yield measurable business benefits in accuracy, speed, and customer satisfaction.

Explore the workshop video and GitHub repository to take the next step in building a robust RAG pipeline for your organization.

Acknowledgment

This workshop was proudly organized in collaboration with KI Park, where we explored practical steps to optimize RAG pipelines. Special thanks to KI Park for hosting the event and making the video available on their channel. The workshop also featured an insightful session on multimodal RAG techniques presented by Taras Hnot from SoftServe. Their expertise in combining retrieval with multimodal capabilities provided a comprehensive view of RAG’s potential in diverse applications.